Sometimes you run across something and you figure, that won’t ever happen again. Then it does, repeatedly. You are then reminded of the fact that any random event is possible when presented with enough opportunities to occur.

Well, I’ve been living in that world for a while, and I think I got to the root of the problem: a bug in Documentum. Not just any bug (or design constraint), but one that requires high throughput and a little luck to reproduce. The existence of a bug really isn’t the issue; all large systems have them. It is the journey to discovery that is the “fun” part.

Creating a Problem Through Solving Another

On one of my projects, we ingest lots of documents. They are organized by a unique numerical identifier. While we technically didn’t need to put them into multiple folders, we decided to do so in order to simplify things for those users who wanted to browse to the records. This also helped to prevent Documentum from having a conniption when we queried for the contents of a folder with a million or so items.

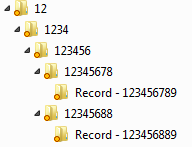

The next question was, “What kind of structure?” Well, it needed to be simple. To illustrate, I’ll provide you an example using Social Security Numbers, though that is not the number in question.

We could have broken it by any number, but 100 items per level seemed reasonable. Besides, in theory, who would really care?

Documentum, that’s who.

The problem arose when we had two concurrent processes trying to create Records 123456789 and 123456889. They are in the same folder structure for several levels. As missing folders were identified, each process tried to create a folder. This created locks and failures.

This was bad.
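To make the collision concrete, here is a minimal sketch of how a record number could map to its folder chain. It assumes two digits per level and four levels, which is inferred from the "100 items per level" rule and the folder names 123456 and 12345688 used in this post; the function names are hypothetical and not part of our ingestion code.

```python
def folder_chain(record_id: str, digits_per_level: int = 2, levels: int = 4) -> list[str]:
    """Return the folder names, outermost first, that a record is filed under."""
    return [record_id[: digits_per_level * depth] for depth in range(1, levels + 1)]


def shared_levels(a: str, b: str) -> int:
    """Count how many leading folders two records have in common."""
    count = 0
    for left, right in zip(folder_chain(a), folder_chain(b)):
        if left != right:
            break
        count += 1
    return count


print(folder_chain("123456789"))
print(folder_chain("123456889"))
print(shared_levels("123456789", "123456889"))
```

Under those assumptions the two records share every folder down to 123456, so two processes arriving at the same moment will both find it missing and both try to create it.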

So we came up with a two-fold solution. The first part was to pre-create the first two levels in the repository, about 10,000 folders. That took care of some of the immediate pain. On some high-density numbers, we went a layer deeper. The second part of the solution was to create a retry timer on the creation of a folder if it failed.
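The retry half of that solution can be sketched roughly as follows. This is not our production code: `find` and `create` are hypothetical callables standing in for whatever looks up and saves a folder, and `RuntimeError` stands in for a lock or save failure.

```python
import random
import time
from typing import Callable, Optional


def create_with_retry(
    find: Callable[[], Optional[str]],
    create: Callable[[], str],
    attempts: int = 5,
    base_delay: float = 0.05,
) -> str:
    """Return the folder id, creating the folder if needed and retrying on failure."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        existing = find()  # another process may have won the race already
        if existing is not None:
            return existing
        try:
            return create()
        except RuntimeError as error:  # stand-in for a lock or save failure
            last_error = error
            # Back off with jitter so colliding processes do not retry in lockstep.
            time.sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.5))
    raise RuntimeError(f"folder creation failed after {attempts} attempts") from last_error
```

A retry like this only helps when the failure is actually reported. It does nothing for a creation that claims success and hands back an id for an object that was never saved.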

We rolled that out a year and a half ago and everything seemed okay. We were wrong.

The Existential Crisis

Here is what ended up happening when the concurrent creation was attempted. Using the above structure, one process created the folder 123456 correctly with no problems. The other process would appear to have created folder 123456, but it actually hadn’t. That didn’t stop the second process from returning a non-existent object id, which was then used by the code for creating the final subfolders as part of the total hierarchy.

The end result was that the code thought everything was okay. The audit trail showed object creation. We could retrieve the record just fine through search. We couldn’t browse to it, but as that was extremely rare to even try, nobody noticed. The issue was non-existent as far as any users were concerned.

At this point, I’ve had my two concurrent “creations”. Now I have a third record, 123456881, that wants to live in the same folder structure. It works fine until it looks for folder 12345688, which was created as part of the second process above. A simple check with the Documentum DFC (Documentum Foundation Classes) shows that the folder doesn’t exist. With that fact, the creation of the “missing” folder is initiated. The save of the “new” folder fails because, within the database, that folder DOES exist.

This is all crazy stuff. My mind is just twisting trying to explain it clearly. Let’s talk about what we found.

Database in Chaos

I did some digging and learned a few important things. The folder 12345688 did actually exist. It had two invalid attributes, i_folder_id and i_ancestor_id. They both referenced the non-existent object, ‘123456’, which is the root of the actual problem.

This made the fix simple: enter the database and correct the values for the hierarchy of folders pointing to the invalid folder. I even created a query to find all instances of this issue:

Select * From dm_folder_r with (NOLOCK)

Where i_ancestor_id Not In

(Select r_object_id From dm_folder_s with (NOLOCK))

And i_ancestor_id IS NOT NULL

* Note that this is from a SQL Server installation. I used the NOLOCK hint so that these read-only queries would not take shared locks on the live tables.
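If you want to see how that query behaves before pointing it at a real repository, here is a small stand-in using SQLite from Python. The two tables are stripped down to the columns the query touches, the object ids are made up, and SQLite has no NOLOCK hint, so treat it as an illustration of the logic only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dm_folder_s (r_object_id TEXT PRIMARY KEY);
    CREATE TABLE dm_folder_r (r_object_id TEXT, i_ancestor_id TEXT);

    -- Two real folders; 'bad0' stands in for the id that was never saved.
    INSERT INTO dm_folder_s VALUES ('good1'), ('child');
    INSERT INTO dm_folder_r VALUES
        ('good1', 'good1'),
        ('child', 'child'),
        ('child', 'bad0'),
        ('child', NULL);
    """
)

# Same shape as the query above: ancestor ids that point at no existing folder.
orphans = conn.execute(
    """
    SELECT r_object_id, i_ancestor_id
    FROM dm_folder_r
    WHERE i_ancestor_id IS NOT NULL
      AND i_ancestor_id NOT IN (SELECT r_object_id FROM dm_folder_s)
    """
).fetchall()

print(orphans)  # [('child', 'bad0')]
```

Only the row pointing at the made-up 'bad0' id comes back. The NULL row is excluded by the IS NOT NULL condition, just as in the original query.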

This gave me a list of Objects that needed to be fixed. Once I had the list, I could then validate the non-existence of the objects with the following query using the i_ancestor_id as the parameter. You will want to check and double-check everything in this process because you do not want to implement an incorrect fix.

Select *

From dm_sysobject_s with (NOLOCK)

Where r_object_id in ('0b01a47c83447536')

At this point, I retrieve the necessary r_object_id that I need to fix the system.

Select r_object_id

From dm_sysobject_s with (NOLOCK)

Where object_name = '123456'

Using this information, I can update the necessary rows. For the ‘12345688’ object, you need to update the i_folder_id in the dm_sysobject_r table. For both the ‘12345688’ and ‘Record – 123456889’ objects, the i_ancestor_id needs to be updated in the dm_folder_r table. You are essentially replacing the invalid object id with the valid one.
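The shape of that repair, as a rough sketch against toy SQLite tables rather than a real repository: `repoint` is a hypothetical helper, the ids are made up, and the real tables carry many more columns. The point is that both updates run in one transaction and the replacement id is verified first.

```python
import sqlite3


def repoint(conn: sqlite3.Connection, bad_id: str, good_id: str) -> tuple[int, int]:
    """Replace bad_id with good_id in both tables, or change nothing at all."""
    # The replacement must be a real object, or we trade one dangling id for another.
    found = conn.execute(
        "SELECT 1 FROM dm_sysobject_s WHERE r_object_id = ?", (good_id,)
    ).fetchone()
    if found is None:
        raise ValueError(f"{good_id} is not a real object")
    with conn:  # commits on success, rolls back if anything raises
        links = conn.execute(
            "UPDATE dm_sysobject_r SET i_folder_id = ? WHERE i_folder_id = ?",
            (good_id, bad_id),
        ).rowcount
        ancestors = conn.execute(
            "UPDATE dm_folder_r SET i_ancestor_id = ? WHERE i_ancestor_id = ?",
            (good_id, bad_id),
        ).rowcount
    return links, ancestors


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dm_sysobject_s (r_object_id TEXT PRIMARY KEY, object_name TEXT);
    CREATE TABLE dm_sysobject_r (r_object_id TEXT, i_folder_id TEXT);
    CREATE TABLE dm_folder_r (r_object_id TEXT, i_ancestor_id TEXT);
    INSERT INTO dm_sysobject_s VALUES ('good', '123456'), ('f88', '12345688');
    INSERT INTO dm_sysobject_r VALUES ('f88', 'bad');
    INSERT INTO dm_folder_r VALUES ('f88', 'f88'), ('f88', 'bad');
    """
)
print(repoint(conn, bad_id="bad", good_id="good"))
```

Returning the affected row counts gives you something to compare against the list from the detection query before you trust the fix.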

Wrapping It Up

I hope that you never encounter this, but if you do, hopefully this will help you through it. EMC support seemed to indicate that this was limited to SQL Server installs due to database constraint limitations. Given the difficulty in reproducing and testing, they can only hypothesize.

In the meantime, we can fix it. As long as we catch it before, in this example, trying to ingest Record ‘123456881’, we will be okay. We can run that first query on a regular basis to find any issues. It does show up in the data inconsistency report, but this helps us more as we can start working on the solution right away.

I’ve seen a few issues like this over the years where i_ancestor_id, i_chronicle_id or i_antecedent_id get a little corrupted and you get weird behaviour. The DFC layer seems to hide it pretty well, so SQL always ends up becoming your friend.

Nice example of how to debug these kinds of errors though.

Thank you for the post. I’ve been using Documentum for 8+ years now and have seen similar issues and limitations. Can you please clarify: did EMC support file this issue as a “bug” and say they were going to address it in a future release of the software? Or is the workaround that you provided their official fix for this issue?

This is what was done while trying to fix the system. By the time we had a case open and were working with them, this process had already been followed, though not documented. As for the odds of an official fix, that question was asked.

It is hard to reproduce and anticipate. They offered some coding advice, which essentially was to put the folder creations into their own transaction. This should work for us, as having a few excess folders in the hierarchy is not a major concern should the folder creation succeed but the actual Record creation fail, causing a roll-back.

The following query will recompute r_link_cnt for all the folders and cabinets:

update dm_sysobject_s s set r_link_cnt =

(select count(*) from dm_sysobject_r b, dm_sysobject_r a where

b.r_object_id_i = a.r_object_id_i

and

b.r_version_label = 'CURRENT'

and

a.i_folder_id_i = s.r_object_id_i )

where r_object_id like '0c%' or r_object_id like '0b%';

I posted a document at https://community.emc.com/blogs/dctmreference/2010/07/19/recompute-rlinkcnt-for-folders-and-cabinets

Also there is a script provided by EMC which resides in the server install folder named dm_fix_folders.ebs which contains options to report the broken links and also to fix them.

Thanks for the query, it could be quite useful, but that is one I wouldn’t run in a large repository, especially when it was live. In this instance, link count wasn’t an issue.

These are “fun” issues to solve. Ah, now comes the post-mortem, where it can be a bit tricky to assess what *really* is the problem. The concurrency issue is one of your own creation, and something we have all done at least once. Okay, maybe more like ten times. It happens. Fault the developer. The next step is looking at the result of that lock and what the behavior should have been by the system.

Two things pop out for me. The first is that the object id for the missing folder was created, but not committed. I don’t have your code in front of me, but my gut tells me that the issue can be solved by adjusting how the sessions are managed within the thread and adding some concurrency checks in the code. (Yeah, simple for me to say.) That would point to this not necessarily being a bug per se. For EMC to do something about the issue, you’d need to convince them DFC needs some added functionality surrounding concurrent threads. (Which I think it does personally. Heck, everything does.)

Second, once the error occurred it should have been detected sooner. Dm_filescan should have picked this up. This is where you can get EMC Engineering to get involved.

Actually the concurrency is from running the ingestion process on multiple servers to handle the load. Making them aware of each other is a little more costly than we can invest.

I think the bug part comes in the fact that something prevents the object from being created, but only successes are registered. They are capturing something somewhere and letting things by. While ingestion runs on multiple servers, they all leverage the same Content Server.

As a whole, your thoughts are pretty solid and definitely give something else for people to reflect upon.

Folders aren’t versioned, so this problem shouldn’t exist and is an undesirable side effect of inheriting from dm_sysobject. It’s another reason that my happy-fantasy-world dm_sysobject is a super-lightweight object with things like folder location and versioning being interfaces applied as needed instead of inherited en masse like in the real world. Oh well, a boy can dream.

Without more details, it’s hard to give a single best solution, but there are a few approaches to consider when facing this kind of problem:

1. If rollback isn’t required, save early and often when building out the folder structure–basically immediately after each object is created–and always test for existence before creating a new object. This does not guarantee success but should reduce the problem since time-between-saves and commits are factors here.

2. Create a semaphore (a lockable object or some-such) so multiple creation threads don’t try to create the folder structure at the same time. Although it’s ALMOST guaranteed to solve the problem, there’s always a chance that an error will leave the semaphore locked–either because of unexpected termination or even because of something like the alleged underlying database server problem causing the problem in the first place. DB semaphores with timeouts can be even safer depending on how much you trust your RDBMS.

3. Assign new objects to the same “to be filed” folder instead of filing at creation time. Then have a dm_job that takes everything from the folder and files each object into its more-permanent location. Having a single process do the filing (assuming from the context above that creation is more important and time-sensitive than location) removes the problem entirely.

4. A better alternative to the “to be filed” folder would be to queue filing requests (via workflows, rows in a database, an actual queuing system) and have that single process watch or run from that queue. This would be my favorite in a high-volume scenario: It’s safe and easily tracked. Filing could be done across multiple processes with a balancer that clumps requests by target folder, it’s reboot-safe, etc.
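As a rough illustration of approach 4, here is an in-process sketch where several ingestion threads only enqueue requests and a single filer is the only thing that ever creates folders. Everything in it is hypothetical: the in-memory queue stands in for workflows or database rows, the dictionary stands in for the repository, and `folder_for` assumes the first eight digits name the target folder.

```python
import queue
import threading
from typing import Dict, List, Optional


def folder_for(record_id: str) -> str:
    """Hypothetical rule: the first eight digits name the target folder."""
    return record_id[:8]


def run_filer(
    requests: "queue.Queue[Optional[str]]",
    folders: Dict[str, List[str]],
    creations: List[str],
) -> None:
    """Single consumer: the only code path that ever creates a folder."""
    while True:
        record_id = requests.get()
        if record_id is None:  # sentinel: no more work
            break
        name = folder_for(record_id)
        if name not in folders:  # check-then-create is safe with one writer
            folders[name] = []
            creations.append(name)
        folders[name].append(record_id)


def ingest(requests: "queue.Queue[Optional[str]]", batch: List[str]) -> None:
    """Ingestion side: enqueue a filing request instead of filing directly."""
    for record_id in batch:
        requests.put(record_id)


requests: "queue.Queue[Optional[str]]" = queue.Queue()
folders: Dict[str, List[str]] = {}
creations: List[str] = []

worker = threading.Thread(target=run_filer, args=(requests, folders, creations))
worker.start()

batches = [["123456789", "123456881"], ["123456889", "123456780"]]
producers = [threading.Thread(target=ingest, args=(requests, b)) for b in batches]
for producer in producers:
    producer.start()
for producer in producers:
    producer.join()

requests.put(None)  # tell the filer to stop once the queue drains
worker.join()

print(sorted(creations))
```

Each folder is created exactly once no matter how the producers interleave, because the existence check and the creation never run in two places at the same time.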

HTH

Thanks for all of the suggestions. We are going with saving early and often balanced with more regular health checks.

P.S.,

I had similar problems under Sybase, but not under Oracle, so I am in no way vindicating SQL Server, but I’d still add the DCML rewrite to the list of suspects.

P.P.S.,

We depended heavily on dm_fix_folders.ebs on that Sybase installation because some code made decisions based on link count, and they were REALLY off in some of the oldest (and most often upgraded) docbases at that client.

Good to see fellow teams facing similar issues. We face this issue on Oracle as well, and we also get the folder object IDs in the Consistency report.
